Written on June 5, 2023 by Rodrigo Radtke de Souza

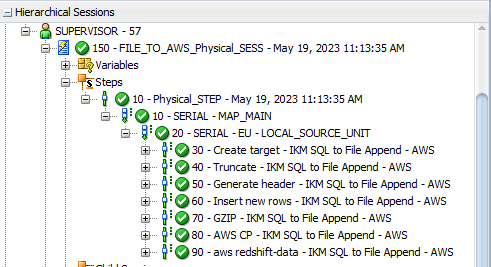

Hi all, probably this is the last post of this series on how to load data from on-premises databases to the major public clouds providers. Today’s post is about Amazon Redshift. Right now, we all know the pattern that we are following: We will duplicate “IKM SQL to File Append” and add some extra steps at the end of it to push the file to the cloud and load the target table. It will look like below:

The first extra command is GZIP, which is the same as the other posts. After we GZIP the file, we will push the file to a S3 bucket using the CP command. To do that, you will need to create a S3 bucket in AWS and install AWS CLI (which you can read about it here) in your ODI server. The AWS CP command is very simple and looks like this:

The next command (aws redshift-data) is a little more complex. redshift-data is an API (read about it here) used to manipulate data in redshift. One of its commands is execute-statement (read about it here), which can be used to issue a copy command (read about it here), that copies a file from a S3 bucket to a redshift table.

The command may vary depending on your setup, but in resume, it will look like this:

aws redshift-data execute-statement --database <database_name> --workgroup-name <workgroup_name> --region <region> --secret-arn <secret_arn> --sql "copy <target_table> from 's3://<bucket>/<file_name>.gz' credentials 'aws_access_key_id=<access_key_id>;aws_secret_access_key=<access_key_secret>' delimiter '|' IGNOREHEADER 1 ACCEPTINVCHARS GZIP region '<region>'"Notice that there are a lot of variables to fill in. secret_arn, access_key_id and access_key_secret are all related to security and depending on your setup, you may even use different ways to authenticate and run the command. But in resume, you will request redshift-data api to run a execute-statement. This execute-statement will trigger a copy command to a target table from a s3 bucket GZIP file. When you run the mapping, it looks like this:

That’s it folks. I hope you have enjoyed this series. See you next time!