Hi all, how are you doing? It has been a long quiet period here on the blog, and the reason is always the same: too much work, projects, personal things and so on. To “force” myself to find some time to write (while I still have the “new year” feeling), I’ll start this series on ODI and Groovy development. I’m not sure how many posts I’ll write, but it will be a step-by-step guide on how to create your own ODI utilities using Groovy scripts. We will start by looking at the tools that we need, and the final goal is to have an ODI utility that solves a specific problem for ODI developers. Let’s start then.

So, what is the problem that we are trying to solve?

ODI developers know that, besides all their project’s problems, they need to deal with boring, repetitive and error-prone daily activities that are often underestimated and that may cause big issues over time in large ODI environments. One of those simple (yet boring) tasks is keeping ODI environments in sync with regard to ODI scenarios. How can I make sure that all ODI scenarios in my DEV environment were already migrated to TEST or PROD? What should have been deployed but was not? How can I see a list of those differences and figure out who made each change and when?

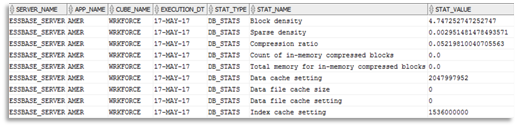

Almost every time that I need to answer one of those questions, I go to the ODI repository metadata tables and start writing queries to get the necessary information and compare it between the different environments. Although it works, it is time consuming, it’s manual, and I need read access to the ODI metadata tables, which is not possible in a lot of places for security reasons.

So, thinking about all that, I decided to create my own ODI utility that can connect to different ODI repositories, compare them and deploy any missing scenario that I wish to deploy. Although the idea sounds simple, it’s a pretty useful tool that may save us a lot of time, and it can be reused in any project that you work on. It can also serve as a base for any other ODI utility that you may want to create, so you can make your job more productive and automate all the boring/manual tasks.
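Just to make the “compare” part concrete, here is a minimal sketch of the idea in plain Groovy. It does not touch ODI at all: the scenario names and versions are made up, and the lists are hard-coded here, whereas the real utility would read them from each repository.

```groovy
// Hypothetical scenario lists, one entry per "NAME VERSION"; the real utility
// would load these from each ODI repository instead of hard-coding them.
def devScenarios  = ['LOAD_CUSTOMERS 001', 'LOAD_ORDERS 001', 'LOAD_ORDERS 002'] as Set
def testScenarios = ['LOAD_CUSTOMERS 001', 'LOAD_ORDERS 001'] as Set

// Scenarios that exist in DEV but were never deployed to TEST
def missingInTest = devScenarios - testScenarios

// Scenarios that exist in TEST but not in DEV
def onlyInTest = testScenarios - devScenarios

println "Missing in TEST: ${missingInTest}"
println "Only in TEST: ${onlyInTest}"
```

Using sets keeps the comparison independent of the order in which each environment returns its scenarios.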

Ok, you have convinced me. So, what do I need to get it done?

As the title of this post mentions, you will need the ODI SDK libraries (they come as part of the ODI install), Groovy/Java and a tool to write your code. I chose Eclipse IDE because I was more familiar with it from my past Java developer days, but you can use anything that you want. In fact, ODI already comes with a Groovy editor that you could use. However, it is a very basic editor that won’t give you a lot of the cool stuff that modern Groovy/Java IDEs provide, like code completion, automatic library imports and so on.

You mentioned Groovy/Java. Why?

That’s a good question, since some people get confused about those two languages. As I’ve written before, a long time ago I used to write Java code as a developer, but that became limited to a few scripts now and then once I started to work with data integration. At first, I thought about creating the utilities in pure Java (due to my background), but ODI already comes with Groovy support, so I decided to look at it. Although I knew the concept behind Groovy, I had never worked with it, so I had to study it a bit before getting started.

Groovy is (a summary from its site): a powerful, optionally typed and dynamic language, with static-typing and static compilation capabilities, for the Java platform aimed at improving developer productivity thanks to a concise, familiar and easy to learn syntax. It integrates smoothly with any Java program, and immediately delivers to your application powerful features, including scripting capabilities, Domain-Specific Language authoring, runtime and compile-time meta-programming and functional programming.

Some key points that we should take from this summary, and that drove my decision to use Groovy, were:

- Simplicity and dynamism: the same logic usually takes fewer lines of code in Groovy than in Java, so it is faster to write and to read. Since it’s simpler, it’s great for writing concise and maintainable automation tasks/scripts (which is our goal here).

- Smooth Java integration: it seamlessly and transparently integrates and interoperates with Java and any third-party libraries, which means that it is very easy for Java developers to learn and use Groovy.

There are other benefits and drawbacks of using Groovy over pure Java, but since ODI supports Groovy and it’s simpler to code (especially for small automation scripts), those seemed compelling enough reasons for me to use it.
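To give an idea of what “fewer lines” means in practice, the small sketch below does the same filtering twice, first in Java style and then in Groovy style. The scenario names are invented for the example; both versions are valid Groovy, which also shows how smoothly Java code fits in.

```groovy
// Hypothetical scenario names, used only to illustrate the syntax
List<String> scenarios = ['LOAD_CUSTOMERS', 'TMP_TEST', 'LOAD_ORDERS']

// Java style: explicit loop and a temporary list (this is valid Groovy too)
List<String> javaStyle = new ArrayList<String>()
for (String name : scenarios) {
    if (name.startsWith('LOAD_')) {
        javaStyle.add(name.toLowerCase())
    }
}

// Groovy style: closures do the filtering and the transformation
def groovyStyle = scenarios.findAll { it.startsWith('LOAD_') }.collect { it.toLowerCase() }

// Both approaches produce the same list
assert javaStyle == groovyStyle
println groovyStyle
```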

Installing Eclipse with Groovy support

I’m going to describe here the steps to get Eclipse working with Groovy, so if you are using another IDE or even coding directly in the ODI Groovy editor, you may skip this part. Installing Eclipse (Eclipse IDE for Java Developers) is very straightforward: you just need to go to the Eclipse site and install it. However, Eclipse is aimed at Java development, not Groovy, so Groovy support requires some additional steps. I read this site and replicated step 3, installing the “Groovy Eclipse plug-in” manually. I also executed step 6 just to make sure that my Eclipse/Groovy install was correct.

Connecting to ODI

Let’s create a script that simply connects to an existing ODI instance, just to validate our Eclipse/Groovy/ODI SDK installation. First, we need to import the necessary Jar files into our Groovy project in Eclipse. Right click on the project and select “Build Path/Configure Build Path”:

On Libraries, select “Add External Jars”:

There are some Jars that you will need to import to make it work. Here is the list:

- Go to “Path to your ODI install\odi\sdk\lib” and import all Jar files from that folder;

- Go to “Path to your ODI install\oracle_common\modules\oracle.jdbc” and import ojdbc8.jar from there;

- Go to “Path to your ODI install\oracle_common\modules” and import all javax* Jar files. Those are only needed to clear some weird warning messages that appear when connecting to the ODI repository from Eclipse.
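If you want a quick sanity check of the build path before writing the real connection code, something like the sketch below can help. It only tries to load two classes that the connection script in this post relies on; `isOnClasspath` is a helper name I made up for this example, not part of the ODI SDK.

```groovy
// Returns true when the named class can be loaded from the current classpath.
// The class is looked up without being initialized.
boolean isOnClasspath(String className) {
    try {
        Class.forName(className, false, getClass().classLoader)
        return true
    } catch (ClassNotFoundException | NoClassDefFoundError e) {
        return false
    }
}

// Class names taken from the connection script used in this post
['oracle.odi.core.OdiInstance', 'oracle.jdbc.OracleDriver'].each { name ->
    println "${name}: ${isOnClasspath(name) ? 'found' : 'MISSING'}"
}
```

If any of the lines prints MISSING, the corresponding Jar file is probably not in the project build path yet.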

Now comes one important step if you are using Eclipse with the ODI SDK Jar files. Once you import the above list, click on “Groovy Libraries” and then on “Remove” as below:

This removes the Groovy libraries that were added to the project by the Groovy plug-in that we installed before. The removal is needed because the ODI SDK libraries already contain Groovy libraries, and the two sets may conflict if they are in different versions. Below is an example of what happens if you don’t do this removal step in Eclipse.

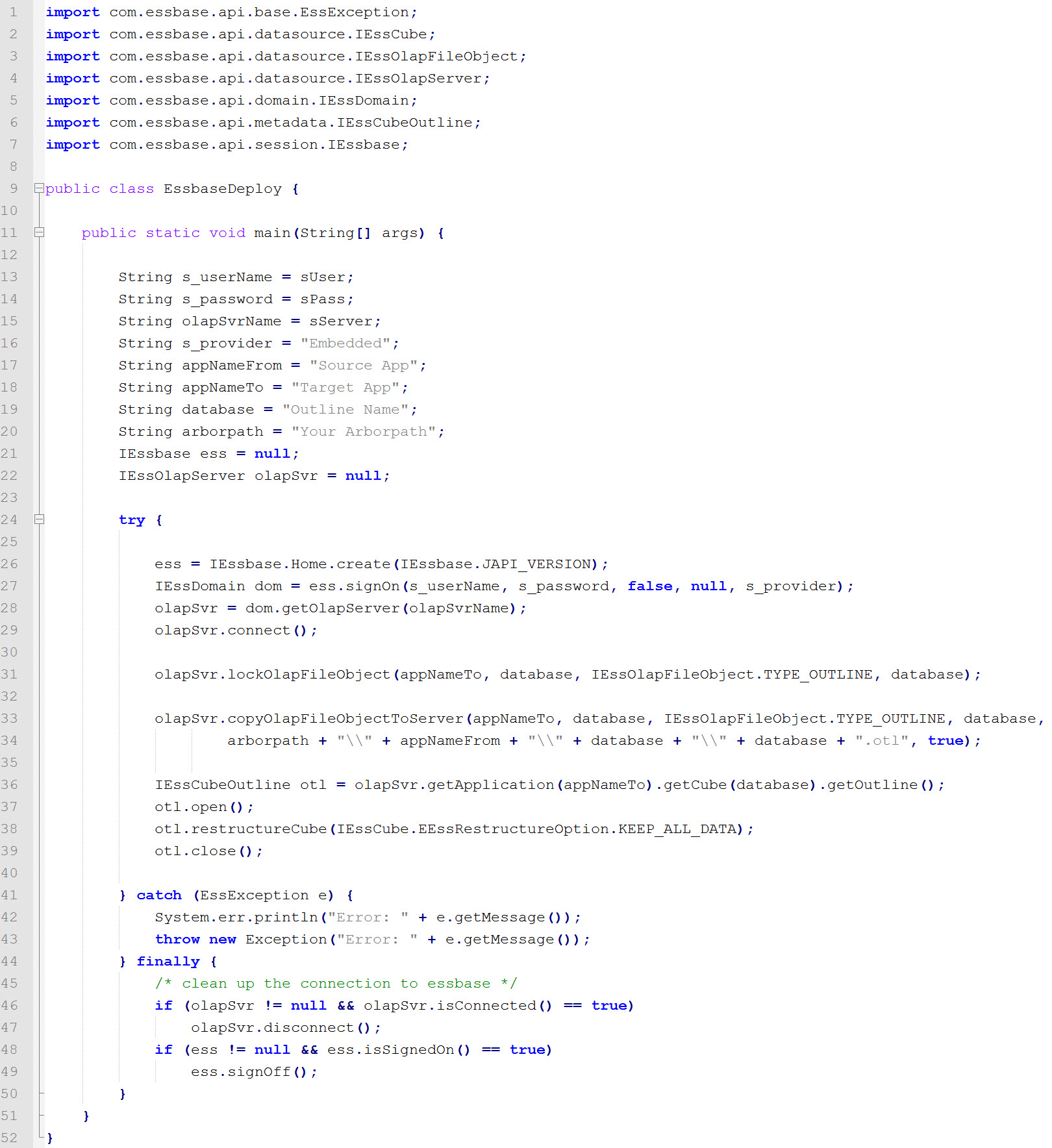

The code to connect to an ODI instance is very simple, as we can see below. It imports a few classes, creates some variables that hold the login information and authenticates against the Master/Work repository.

```groovy
import java.util.logging.Level
import java.util.logging.Logger

import oracle.odi.core.OdiInstance
import oracle.odi.core.config.MasterRepositoryDbInfo
import oracle.odi.core.config.OdiInstanceConfig
import oracle.odi.core.config.PoolingAttributes
import oracle.odi.core.config.WorkRepositoryDbInfo
import oracle.odi.core.security.Authentication

// Keep the Oracle JDBC driver quiet: only severe messages are logged
logger = Logger.getLogger("oracle.jdbc")
logger.setLevel(Level.SEVERE)

// Login information (replace the placeholders with your own values)
sourceUrl = "jdbc:oracle:thin:@YOUR_SERVER_INFO"
driver = "oracle.jdbc.OracleDriver"
sourceSchema = "DEV_ODI_REPO"
sourceSchemaPwd = "XXXXXXXX"
sourceWorkrep = "WORKREP"
sourceOdiUser = "XXXXXXXX"
sourceOdiUserPwd = "XXXXXXXX"

// Describe the Master and Work repositories and create the ODI instance
sourceMasterInfo = new MasterRepositoryDbInfo(sourceUrl, driver, sourceSchema, sourceSchemaPwd.toCharArray(), new PoolingAttributes())
sourceWorkInfo = new WorkRepositoryDbInfo(sourceWorkrep, new PoolingAttributes())
sourceOdiInstance = OdiInstance.createInstance(new OdiInstanceConfig(sourceMasterInfo, sourceWorkInfo))

// Authenticate the ODI user and bind the authentication to the current thread
sourceAuth = sourceOdiInstance.getSecurityManager().createAuthentication(sourceOdiUser, sourceOdiUserPwd.toCharArray())
sourceOdiInstance.getSecurityManager().setCurrentThreadAuthentication(sourceAuth)

println("Connected to ODI! Yay!")
```
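The login information above is hard-coded, which is fine for a first test but not something you would want to keep in a script. One possible alternative, shown here only as a sketch, is to read the values from a properties file. The helper name `loadConnectionSettings` and the key names are my own choices for this example, not an ODI convention, and a temporary file stands in for the real one.

```groovy
// Hypothetical helper: reads the connection settings from a properties file
// and fails early when one of the expected keys is missing.
Map loadConnectionSettings(File file) {
    def props = new Properties()
    file.withInputStream { props.load(it) }
    ['url', 'schema', 'schemaPwd', 'workrep', 'odiUser', 'odiUserPwd'].collectEntries { key ->
        def value = props.getProperty(key)
        if (value == null) {
            throw new IllegalArgumentException("Missing property '${key}' in ${file}")
        }
        [(key): value]
    }
}

// Demo: a temporary file stands in for a real, access-restricted file
def demo = File.createTempFile('odi-dev', '.properties')
demo.deleteOnExit()
demo.text = '''url=jdbc:oracle:thin:@YOUR_SERVER_INFO
schema=DEV_ODI_REPO
schemaPwd=XXXXXXXX
workrep=WORKREP
odiUser=XXXXXXXX
odiUserPwd=XXXXXXXX
'''

def settings = loadConnectionSettings(demo)
println "Repository schema: ${settings.schema}"
```

With one file per environment (DEV, TEST, PROD), the same connection code could be pointed at a different repository just by changing the file name.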

When we execute the code (Run/Run As/Groovy Script), we can see that it connects successfully to our ODI instance. You may also lower the ODI log level if you don’t want so many details in the output, but for now I’ll leave it as is.
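If you do want a quieter console, a sketch like the one below may be enough. It assumes that ODI writes its messages through java.util.logging under logger names that start with “oracle.odi”; I have not confirmed that this covers every message the SDK prints, so treat it as a starting point.

```groovy
import java.util.logging.Level
import java.util.logging.Logger

// Assumption: ODI logs through java.util.logging under names starting
// with "oracle.odi". Keep a reference to the logger so the level setting
// is not lost if the logger gets garbage collected.
def odiLogger = Logger.getLogger('oracle.odi')

// Only warnings and errors will be reported from now on
odiLogger.setLevel(Level.WARNING)
```

This needs to run before the ODI instance is created to affect the messages printed during the connection.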

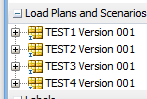

That’s it, folks, for our first post. In the next one I’ll talk about how to get all ODI scenarios, load plans and folders and display them in a tree component, similar to what we have in ODI Operator.

See ya!