When I started working with ODI it was in version 10, and back there we had a few bugs, the UI was good (back there we could change the expressions and we didn’t have to take out the focus to save the changes, for example) , everything worked well, we could write a variable name with upper or lower case, the metadata navigator worked very well and that was one of the things that made the users choose ODI instead of power center Informatica, because they had an easy way to run their interface at will, and some other good things. It was a very stable version of ODI, good times.

Then 11 version came out. Well, the first thing we noticed was the UI, and the huge amount of bugs that came with it, and most of them in the interface screen. In 11 version, if you try to delete a filter, all the other filters disappears, but they are still there, if you close and re-open the interface they’ll come back, if you change a expression and doesn’t remove the focus from the filed it’ll not commit the changes, if you delete a datastore and put a new one (because some model changed for example) you have a good chance to not be able to save the interface for some bizarre error and you need to do this operation over and over, the variables name must be upper case for some odd motive, and other things. Another big loss was the metadata navigator that was replaced by ODI Console, a worse version with so many bugs that we had to stop using it. Some bugs like lack of security (everybody could see everything), all the execution ran as supervisor, we couldn’t see the load plans (only place where the security works), we couldn’t see the variables and lots of other things.

BUT despite of that, the functionalities for the DEV team were almost the same.

Now we have a new version of ODI. The 12c. Ok, this is only our first impressions and we could have been doing something very wrong (and I pray for this). When a software changes its version and two specialists takes more than 30 minutes trying to figure out why and how or what they need to do to sum a column in an interface or should I say mapping (yes, this is the new name, I liked it and this is one of the few things that made sense to me in this new version), something is very wrong with it.

Ok let’s start from the beginning. When I started to work with datamart, the first tool that I used was OWB, and after some time when I started to use ODI to make some integration, I really missed some stuffs from OWB. It makes sense to get this two tools and merge it together. From OWB we had a cool mapping process that makes easy to understand what that transformation is doing, multiple targets, and a few other things that I missed in ODI, BUT, ODI has the Agent that allow us to connect anywhere without the need to create a heterogeneous service or a dblink or other stuff like that, it has more flexibility (and when I say more you can understand infinite more), we don’t need to deploy the mapping to create a procedure in a oracle database to integrate something, what makes the development test super-fast, we have a lot of components, well, ODI is so much better in this aspect that the few things I missed doesn’t bother me at all.

So in this new version they tried to merge the two tools. What looks good in the paper (I mean blogs and documentations) looks terrible in ODI.

We installed it this weekend to see what happened in this new version and we saw a very different workspace for the interface, I mean for the mappings. This simple ODI UI…

ODI 11g Interface UI

Turned into this:

ODI 12c Interface UI

Humm looks good right? Well, yes and no. I’m working in a 60” full screen TV and I need to drag the screen left and right, up and down to make everything visible, poor devs that has a screen smaller than mine.

Ok but this is only layout, everything else should be better right? Well, unless we were doing something very wrong they put a lot of more complexity to solve some issues that we were able to solve very quickly since version 10.

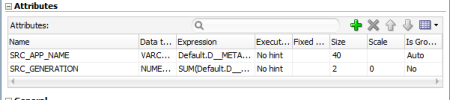

First of all, in all the other versions if you want to sum something in ODI you just need to get the expression in the target datastore and put a SUM() function on it. ODI would do the group by for you and everything is ok.

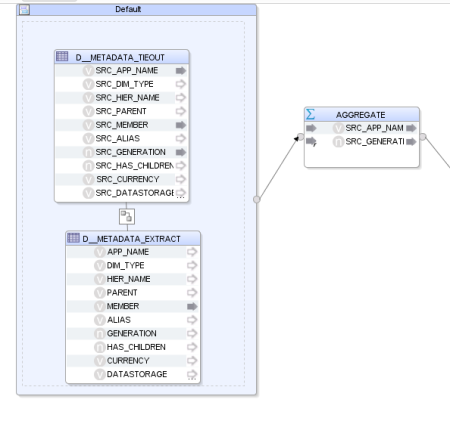

ODI 11g Sum

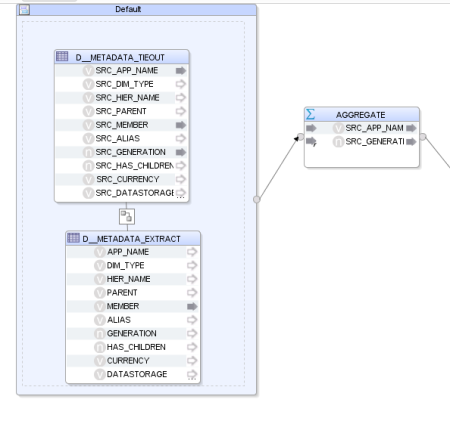

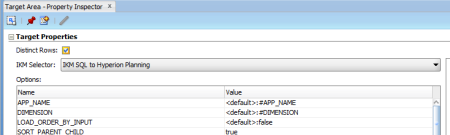

In the new version you need to drag an object called Aggregate, put all the columns that you want to map trough this (like in OWB), change the options of this object, and in the end, put the same SUM() expression in this object instead of in the target datastore.

ODI 12c Sum Part 1

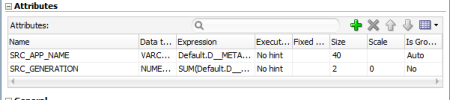

ODI 12c Sum Part 2

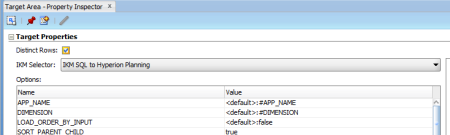

ODI 12c Sum Part 3

If you try to put the expression as before (<=11g) it’ll not create the group by and you’ll not be able to run the interface because it will just simply fail ….

Well, at least in OWB when you use the Aggregate object it’ll aggregate the columns that you define without the need to write the SUM() function. Why they put this new complexity? Ok you can execute this SUM() in another place different from the source or the target but still…

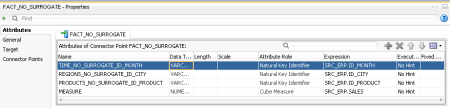

We have some other components that we need to use in the interface right now.

ODI 12c Mapping Components

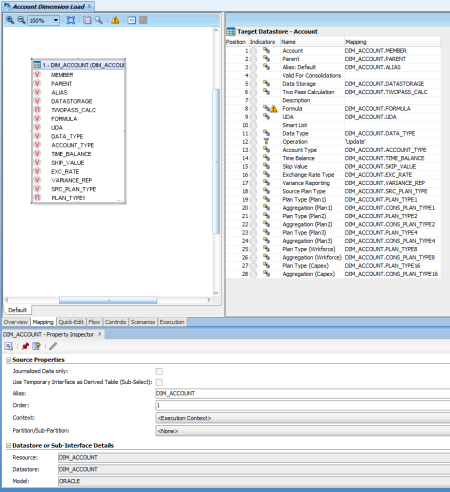

The dataset is used when you want more than one dataset in the source (we already have it in the 11g, the difference now is that you need more screen to manage it but the bright side is that you’ll not forget to change anything because you missed the datastore tab like in the old version [yes I did it a lot])

ODI 11g Datastore

ODI 12c Datastore

The distinct component does not need to be explained, the only thing I had to say is that in the old versions you need only to flag it in a simple check box and now we need to add a distinct component in the flow, drag all columns to it and them drag those columns again to the target. A complete waste of time.

ODI 11g distinct

ODI 12c Distinct

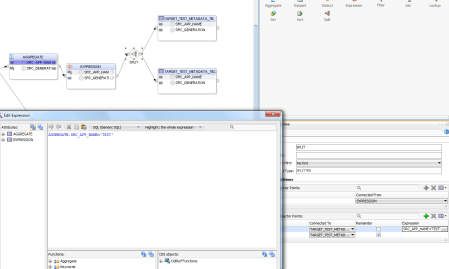

The expression… well, almost the same as Aggregate. Now instead of just write any expression in the target datastore you may add this object in the flow, BUT it will work if you just write the expression on the target. So, why do we need to have this additional object???

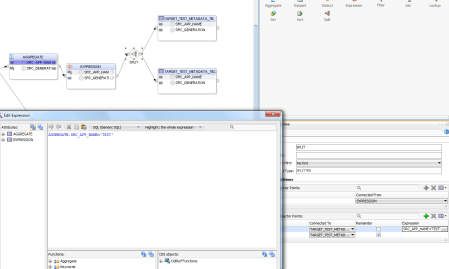

ODI 11g Expression

ODI 12c Expression

For the filter, join and lookup table nothing changed.

ODI 12c Lockup

The set is to define the type of union you can have between the datasets, same as before but now it’s in the mapping too.

ODI 12c Set

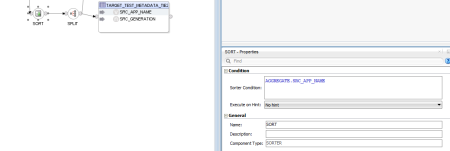

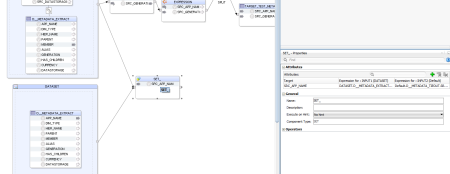

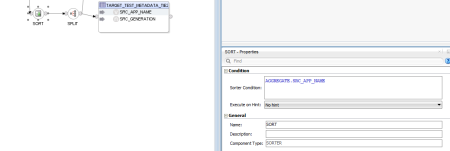

We now have a sort component, so now we may stop doing “SQL injection” or “KMs changes” for a simple order by component (of course I liked this one).

ODI 12c Sort

And the Split component. This one is what I missed the most in OWB. This allow us to say something like: if the DIMENSION is Account, all the data goes to DIM_ACCOUNT, DIMENSION = ENTITY then DIM_ENTITY, the others goes to DIM_OTHERS for example.

This is a cool thing but easily done using our command on source and target in a procedure (See this post 10 Important Things to Improve ODI Integrations With Hyperion Planning Part 2).

ODI 12c Split

As we can see a lot of things were made for this version, but all this things makes it unusable. Really, in the old versions I already tried to not use interface, only if was absolute necessary, because they are time consuming, inflexible, hard to maintain and there’s nothing you can’t do in a procedure that is a lot better and faster to create then interfaces, in fact I only use interface when I want to use the CKM to use some constraints for data quality, nothing I can’t do in a procedure, but this is for sure something easier to achieve using interfaces. Despite of that, everything else I prefer to use a procedure, mainly because I can get rid of the models, that looks good, but for me they are the true villain of ODI. Models are hard coded information, and I hate hard code.

In resume, in this new version things that were relative simple to use, now are a nightmare to create. Of course that things will get more visual but the developers will pay a very high price for that cool looking. Oracle just added foolish complexity on things that were simple and that worked very well. Do you want a final example? On prior versions of ODI, you would import all KMs that you needed for that specific project and you would pick one of them from the combo box on the flow tab. If you needed to change it later, just pick another one from that same list and that’s it:

ODI 11g KM Selection

On version 12, first you need to be on the Logical tab of the Mapping object, click on the target table to get its focus, expand “Target” properties on the right panel and select its target “Integration Type”. This type will filter which KMs you will be able to see in the Physical tab:

ODI 12c KM Selection 1

In the Physical tab, click again on the target table, expand “Integration Knowledge Module” and select one of the KMs of that type that you filtered in the previous tab:

ODI 12c KM Selection 2

And what happens if you want to change the KM? If it is from a different type, first you need to go to the Logical tab, change its type, go to Physical, and select another KM. Ok, they have categorized the KMs and this is a good thing but why they didn’t add the Integration Type in the same tab of the KM selector??? Now we need to go back and forth without any good aparent reason and if you are in doubt on which KM to select and you want to read their descriptions to see which one best fits your needs, then you are totally screwed.

But there are two really cool things about this 12c version. First one debugger! Finally they added a debugger to ODI! This feature was a long waited one because it was simply terrible to debug things in ODI. Now you can go execute the code step by step, take a look in the variables content for that session and you can even query uncommitted data through the transactions:

ODI 12c Debug

Second cool stuff: Roles in Security Module! Again, another long awaited simple feature that did not exist until now. Roles are similar to Groups where the security added to a Role is replicated to all users that belongs to that Role. This is great, because in the old days, Security configuration was madness with a lot of manual configuration. Finally now we will have a better Security framework to work on it.

ODI 12c Roles

Well, there’s a lot of thing to see in this new version yet, but the first thing wasn’t pretty. I didn’t uninstall it yet. Let’s see if we can find anything good that justify this living hell that the interfaces (mappings) turned out to be.

If someone of you learn something different or get a different idea for this new version please let us know because I still don’t believe that these changes happened and this is the way Oracle wants us to work from now on. (By the way the UI for procedures are different too and for now I’ll not say if I liked it or not because normally we need some time to get used to it [but I didn’t like it J]).

This weekend we’ll try a migration and let our impressions here.

See you guys!

button will create a Level.

button will create a Level.