Hi all! Continuing our series, today we will talk about how to load data into BigQuery using ODI. There will be two posts: one covering Simba JDBC and another covering Google Cloud SDK Shell. You will notice that the first approach (using Simba JDBC) has a lot of challenges, but I think it's worth looking at, so you have options (or at least know why you should avoid it).

First, you need to download Simba JDBC and add it to your ODI client/agent (like what was done with Snowflake JDBC here). You also need to “duplicate” an existing ODI technology (I used Oracle in this example) and name it BigQuery:

In JDBC Driver, you will add the Simba driver, and in URL you will add your connection string to Google BigQuery. I won’t go over the details here, since there are many ways to build this URL, but you can check all of them in this link.
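Just to give an idea of what such a URL looks like, here is a minimal sketch that assembles one for service account authentication. The property names (`ProjectId`, `OAuthType`, `OAuthServiceAcctEmail`, `OAuthPvtKeyPath`) follow the Simba driver's connection string format as I understand it; the helper name, project, e-mail and key path are all made up for illustration, so check them against the driver documentation for your version.

```python
def build_bigquery_jdbc_url(project_id: str,
                            service_account_email: str,
                            key_path: str) -> str:
    """Assemble a Simba-style BigQuery JDBC URL (hypothetical helper)."""
    # Endpoint and port used by the Simba driver for the BigQuery REST API.
    base = "jdbc:bigquery://https://www.googleapis.com/bigquery/v2:443"
    # OAuthType=0 is assumed here to mean service account authentication.
    props = {
        "ProjectId": project_id,
        "OAuthType": "0",
        "OAuthServiceAcctEmail": service_account_email,
        "OAuthPvtKeyPath": key_path,
    }
    prop_str = ";".join(f"{key}={value}" for key, value in props.items())
    return f"{base};{prop_str};"


if __name__ == "__main__":
    # All values below are placeholders, not real credentials.
    print(build_bigquery_jdbc_url(
        "my-project",
        "odi@my-project.iam.gserviceaccount.com",
        "/path/to/key.json",
    ))
```

The resulting string is what goes in the URL field of the ODI Data Server. Other authentication types use a different set of properties.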

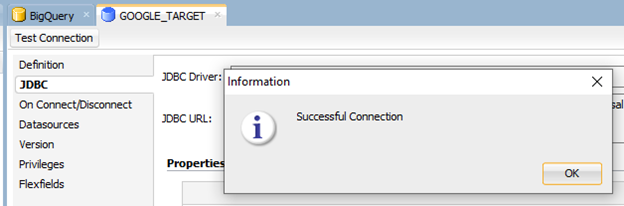

Press “Test Connection” to see if it is all working as expected:

Create a new Physical schema and give it the name of your BigQuery Dataset:

Create a new Logical Schema and a Model. If all is correct, you will be able to Reverse Engineer as you normally do with other technologies:

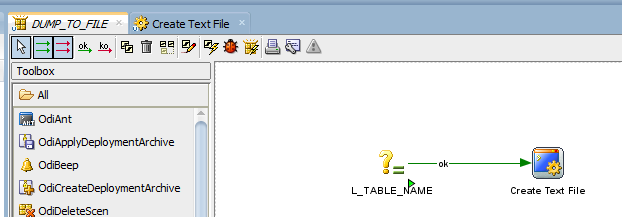

Let’s go ahead and just create a new mapping. This is a simple one: getting data from an Oracle database and loading it to BigQuery:

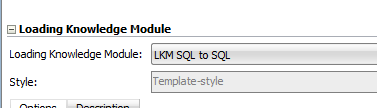

As LKM, I’m using LKM SQL to SQL:

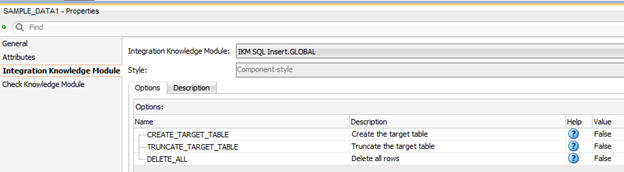

As IKM, I just used a Global one:

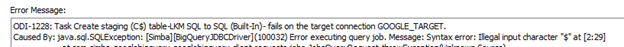

When I ran it, it failed. If we check Operator, we will see that it couldn’t insert new rows because it was not able to create the C$ temporary table. If we look further, we see that BigQuery considers “$” an illegal character in table names:

If we take the create table statement from ODI Operator and try to execute it in BigQuery, we will see a lot of issues with it:

First, as I said before, we cannot have $ signs, so let’s try to remove it:

It also does not accept the NULL keyword. Removing it from each column gave another error:

Varchar2 is not a thing in BigQuery, so if we change it to String, it will work fine:
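The three manual fixes above can be sketched as a small script. This is only an illustration of the edits I made by hand, not something ODI runs: the function name, the `MYDS` dataset and the table and column names are made up, and it only handles the simple case of nullable VARCHAR2 columns.

```python
import re


def to_bigquery_ddl(odi_ddl: str) -> str:
    """Apply the three manual fixes to an ODI-generated CREATE TABLE."""
    # 1) BigQuery rejects "$" in table names, so drop it (C$_ becomes C_).
    ddl = odi_ddl.replace("$", "")
    # 2) Remove the bare NULL keyword after each column, keeping NOT NULL.
    ddl = re.sub(r"(?<!NOT)(?<!\s)\s+NULL\b", "", ddl, flags=re.IGNORECASE)
    # 3) VARCHAR2(n) does not exist in BigQuery; STRING takes its place.
    ddl = re.sub(r"\bVARCHAR2\s*\(\s*\d+(\s+(CHAR|BYTE))?\s*\)", "STRING",
                 ddl, flags=re.IGNORECASE)
    return ddl


if __name__ == "__main__":
    # Hypothetical statement in the shape ODI generates for a C$ work table.
    odi_ddl = ("create table MYDS.C$_0SRC "
               "(C1_NAME VARCHAR2(50) NULL, C2_CITY VARCHAR2(100) NULL)")
    print(to_bigquery_ddl(odi_ddl))
```

Doing this by hand for every statement is obviously not an option, which is why the same changes need to be made in the technology definition itself.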

So, just to create a temporary C$ table, we had to make several changes to the statement. This means that the ODI/BigQuery integration is not as straightforward as it was for Oracle Cloud or Snowflake. We will have to make some changes and additions to the BigQuery technology in ODI. Let’s start with the $ signs. Double click BigQuery and go to the Advanced tab. There, let’s remove the $ sign from the temporary table prefixes (I removed it only from C$ for this example):

Also, you need to remove the DDL Null Keyword:

You may need to make many more changes depending on what you need to load, but for this example, we will try to keep it as simple as possible.

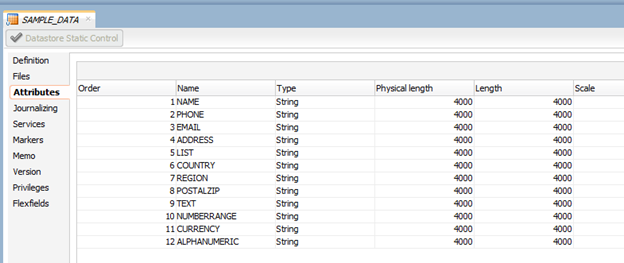

The next step is to create a String Datatype. You can go to the BigQuery technology and duplicate an existing Datatype. For this example, I used Varchar2. Then I changed the Name/Code/Reverse Code/Create table syntax/Writable Datatype syntax.

Another step is to change our source technology, in this case Oracle. We need to do that because ODI needs to know how to convert a datatype from one technology to another. We do this by going to the Oracle Technology, double clicking each source datatype that we use, and setting what it converts to in the new target technology (BigQuery). For this example, we will only be loading Varchar2 columns into String columns, so we will make just one change. In a real-world scenario, you would need to change all the ones that you use. Double click Oracle’s VARCHAR2 Datatype, click on the “Converted To” tab and select String for the BigQuery Technology.
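To get a feeling for how much of this work a real project would need, the “Converted To” settings can be thought of as a lookup table. In the sketch below, only the VARCHAR2 to STRING entry comes from this example; the other entries are my assumptions about reasonable BigQuery equivalents, and the helper name and column list are hypothetical.

```python
# Oracle datatype -> BigQuery datatype, mirroring the "Converted To" tab.
ORACLE_TO_BIGQUERY = {
    "VARCHAR2": "STRING",   # the only conversion configured in this post
    "NUMBER": "NUMERIC",    # assumption: not configured in this post
    "DATE": "DATETIME",     # assumption: Oracle DATE carries a time part
}


def missing_conversions(source_columns: dict[str, str]) -> list[str]:
    """Return the source datatypes that still lack a conversion entry."""
    used = {datatype.upper() for datatype in source_columns.values()}
    return sorted(used - set(ORACLE_TO_BIGQUERY))


if __name__ == "__main__":
    # Hypothetical source table: column name -> Oracle datatype.
    columns = {"NAME": "VARCHAR2", "ID": "NUMBER", "PHOTO": "BLOB"}
    print(missing_conversions(columns))
```

Every datatype this reports as missing is one more entry to configure by hand in the Oracle technology before the mapping can generate valid DDL.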

One last change is to go to our BigQuery datastore and change the column types to String:

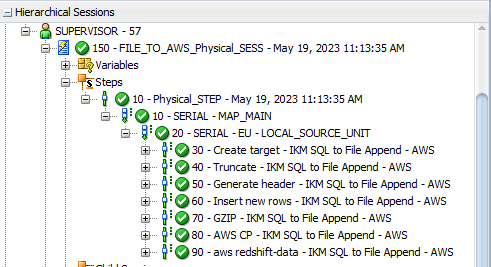

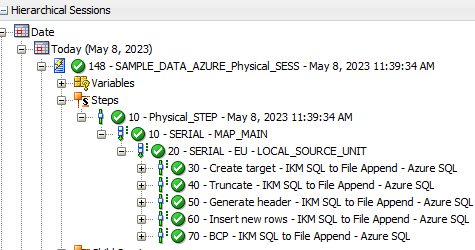

This should be enough for us to test the interface again. When we run it, no error happens this time:

However, the data load took forever to complete (I cancelled it after some hours of trying to load a table with 1 million rows). The ODI job was able to create the work table and I could see data flowing into BigQuery, but the speed was just too slow:

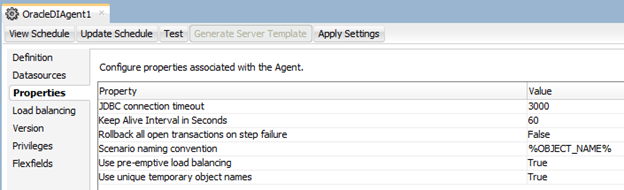

I tried changing the Array Fetch/Batch Update size/Parallelism in the Google BigQuery Data Server ODI object, but none of the values that I tried made it run as fast as I wished:

I’m not sure if the slowness was due to something in my architecture, but at this point I just thought that, even if it worked reasonably fast, it wouldn’t be as fast as creating a file and sending it directly to BigQuery (similar to what we did for Snowflake). Also, the amount of customization needed to load a simple table is so high that I really don’t want to think about how bad it will get when we get to some complex mappings.

Not surprisingly, my tests with text files were extremely fast and the customizations needed were minimal compared to the JDBC method, so that’s what we will be covering in the next post.

Thanks! See you soon!