Hi all. I’ve decided to take a little break in our Hyperion Planning series to talk a about a “confusing” (and underestimated) feature in ODI: Optimization Context.

This is a very important feature but few people know how to use it correctly. This setting is used in design time to make ODI aware of how it should build its code. Let’s take a look at the following example:

For some reason (budget constraint, laziness, etc) the sales database schema that you want to read is in the same data server then your DW schema that you want to load in development. In production the situation changes and you have two separated data server, one for each schema.

Let’s say that we have two logical schemas, one for Sales (LOGICAL_SALES) and another for DW (LOGICAL_DW). In Development we created the following Physical Topology:

In Production we have this:

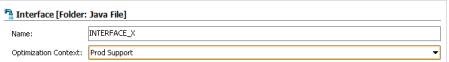

I will not go through all the small steps to replicate this here, but we need to set up the Physical/Context/Logical information, create the data models and reverse the tables. When we build the interface, things start to get interesting. Let’s take a look at the interface using the “Development” context in Optimization Context:

In the Flow tab we have only one IKM to select because in the Development context our source and target physical schemas reside in the same data server. ODI uses the Optimization context to figure out how it is going to build its flow tab. Let’s change the Optimization context to Production now:

Well, it changed. Now we have one LKM and one IKM. Again ODI checked the Optimization context to build its flow tab. As Production has two separate data servers, ODI added a LKM to be able to load from one server to the other. So the Optimization context is the key parameter to let ODI know how to build its code. And it can be very tricky.

Imagine that we change back to Development Optimization context. Now we have just one IKM in the flow tab. Let’s save it and generate a scenario with that interface. If we run it using the Development context it will work fine because both schemas are in the same data server. But what happens if we run it using the Production context?

It failed. If we check the steps, we have just one “Insert new rows” that tries to insert into DW schema using a select in the Sales schema:

As in Production the schemas are in separated data servers and ODI created its flow tab using the Development Optimization context this code will never work. If we change the Optimization context to Production, regenerate the scenario and run it again, it will create additional LKM steps and it will work fine:

It is worth mentioning that the opposite is also true. Imagine that in Development you have different data servers, but in Production you have only one data server. If you use Development Optimization context ODI will create its code with a LKM and it will add extra steps that would not be necessary in the Production environment. This wouldn’t give you any error, but these extra steps will be redundant and will result in less performance.

So this concludes our post. Prior to select your Optimization context, find out which is your topology in all environments. In this way you will be sure that ODI will create the correct code for all environments without any extra steps or errors.

See ya!