Written on March 23, 2023 by Rodrigo Radtke de Souza

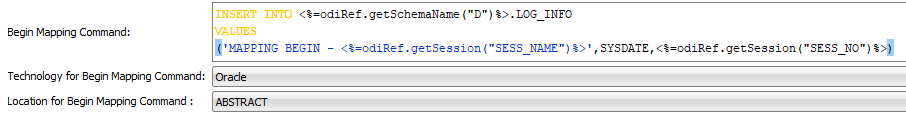

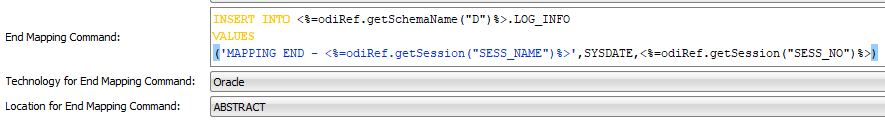

Hi all. Today’s post is an “enhancement” of one post that I did a long time ago regarding using Oracle’s metadata to build dynamic code. I say it is an enhancement because that technique would use the source/target command tabs and it has a 4000 character limitation. So, if you are trying to build some metadata information that crosses this limit, it will fail.

You should be good using source/target command technique in 90% of your data loads, but there are a few exceptions that we need to go beyond 4000 characters. One of these examples is when you are trying to build dynamic code to generate text file loads in ODI. The example that I’ll show here is about dumping the data from any Oracle table to a text file dynamically, using only one ODI procedure.

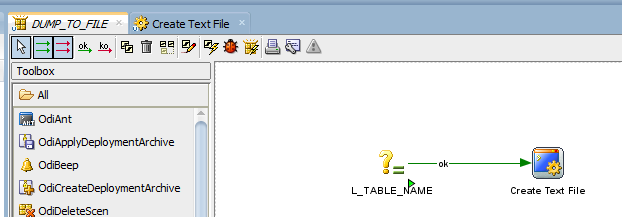

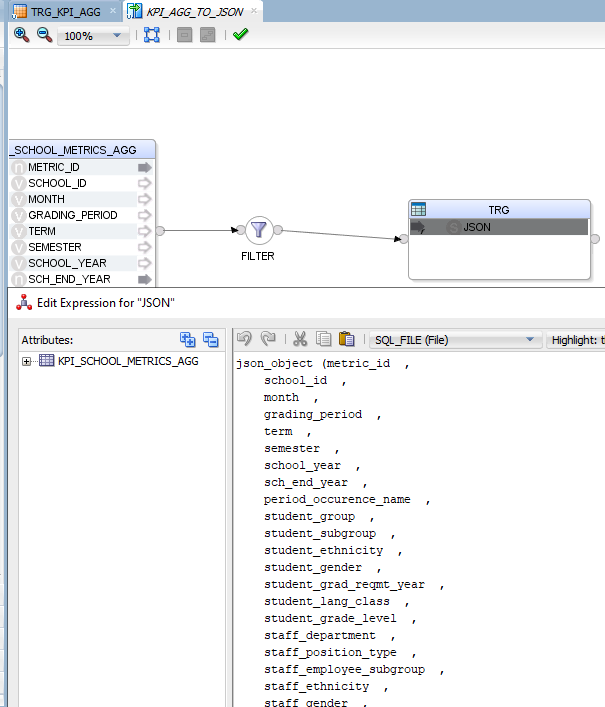

This is what we will build. I’ll go over each step in detail:

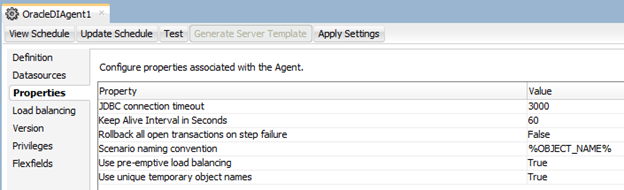

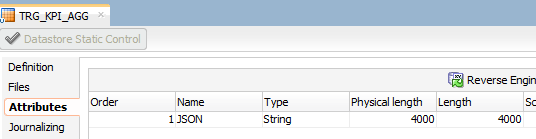

Basically, it is an ODI procedure that will receive a table name as a parameter and it will write that table data into a text file using pipe “|” as delimiters. This is a very small but useful procedure that you may use when you need to create fast text files from tables/views without going through all the work to create ODI mappings for it.You will need two Logical Schemas, one for the source table and another one for the target file. In this example I used LOCAL_SOURCE that points to an Oracle database and OUTPUT_FILE that points to a folder where the text file will be created.First step is to get the file metadata. As I said, this will be done in a different way now. Instead of using source/target tab commands, we will use Java BeanShell to retrieve this information. This is because ODI creates a very lengthy metadata for each column and the total amount of metadata often crosses the 4000 characters limit. Just as an example, each column in a file text load looks similar to this:

"CRDWG_COLSNP$CRCOL_NAME="+metaDataSrc.getColumnName(i) + "SNP$CRTYPE_NAME=STRINGSNP$CRORDER="+i+"SNP$CRLENGTH=4000SNP$CRPRECISION=4000SNP$CRACTION_ON_ERROR=NULLSNP$"Here is the full code for the “Get File Metadata” step:

<@

import java.sql.*;

String table_name = "#DWCLOUD.L_TABLE_NAME";

String folder_path = odiRef.getSchemaName("OUTPUT_FILE","D")+"/"+table_name;

String folder_path_txt = folder_path+".txt";

String table_schema = odiRef.getSchemaName("LOCAL_SOURCE","D");

String table_metadata = "/*$$SNPS_START_KEYSNP$CRDWG_TABLESNP$CRTABLE_NAME="+table_name+"SNP$CRLOAD_FILE="+folder_path_txt+"SNP$CRFILE_FORMAT=DSNP$CRFILE_SEP_FIELD=0x007cSNP$CRFILE_SEP_LINE=0x000D0x000ASNP$CRFILE_FIRST_ROW=1SNP$CRFILE_ENC_FIELD=SNP$CRFILE_DEC_SEP=SNP$CRSNP$";

String table_header = "";

String table_header_parameter = "";

Connection ConSrc = DriverManager.getConnection("<%=odiRef.getInfo("SRC_JAVA_URL")%>","<%=odiRef.getInfo("SRC_USER_NAME")%>",odiRef.getInfo("SRC_PASS"));

Statement stmtSrc = ConSrc.createStatement();

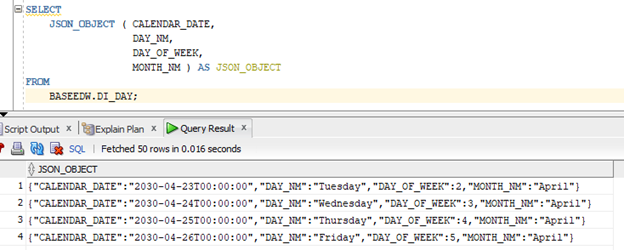

ResultSet querySrc = stmtSrc.executeQuery("select /*+ parallel(64) */ * from "+ table_schema +"." + table_name + " where 1=2");

ResultSetMetaData metaDataSrc = querySrc.getMetaData();

int count = metaDataSrc.getColumnCount();

for (int i=1; i<=count; i++)

{

table_metadata = table_metadata + "CRDWG_COLSNP$CRCOL_NAME="+metaDataSrc.getColumnName(i) + "SNP$CRTYPE_NAME=STRINGSNP$CRORDER="+i+"SNP$CRLENGTH=4000SNP$CRPRECISION=4000SNP$CRACTION_ON_ERROR=NULLSNP$";

table_header = table_header+metaDataSrc.getColumnName(i);

table_header_parameter = table_header_parameter+":"+metaDataSrc.getColumnName(i);

if (i==count)

{

table_metadata=table_metadata+"CR$$SNPS_END_KEY*/";

} else

{

table_metadata=table_metadata+"CRSNP$";

table_header = table_header + ",";

table_header_parameter = table_header_parameter + ",";

}

}

ConSrc.close();

@>

How it works? It uses a variable (that you will use in an ODI package later on), to figure out which table it will dump into a file. It actually can be other objects that you can query, like a view. Views are actually great to be used here since you may create any kind of business logic and filters on the view and dump the data to a file when needed. It also uses both logical schemas (source and target) to figure out where to read the source data and place the target file.

It then creates a connection object using the source connection that you set in the ODI procedure (please pay close attention to each source/target logical schema in the ODI procedure) and it runs a query where 1=2 just to retrieve the table/view metadata.

Having this metadata in the result set, it loops through it and starts to “build” the dynamic code for the header and the columns. It does some special conditions when it reaches the last column and then it closes the connection.

It may sound complicated, but it’s fairly simple. In less than 40 lines of code we are able to retrieve all metadata to dump any table’s data to a flat file in a dynamic way. The other steps are now very straight forward.

“Create File” step will be as simple as this:

create table "<@=folder_path@>"

<@=table_metadata@>

Same for “Truncate File”:

truncate table "<@=folder_path@>"

<@=table_metadata@>

“Create Header”:

create header (<@=table_header@>)

<@=table_metadata@>

“Insert Rows” is the only one that needs two steps. In Source Command we have:

select

/*+ parallel(64) */ <@=table_header@>

from <@=table_schema@>.<@=table_name@>

where

(1=1)

This one is used to read the source table. In Target Command we have the insert statement to the file object:

insert into "<@=folder_path@>"

(

<@=table_header@>

)

values

(

<@=table_header_parameter@>

)

<@=table_metadata@>

Add this procedure in an ODI package, alongside with the ODI variable for the table name:

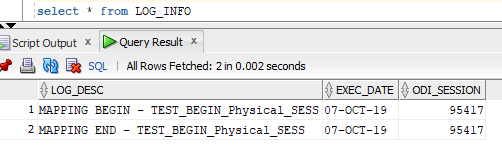

Add the table/view object that you want to dump the records. In this example, I used a table named SAMPLE_DATA. When I executed the package, I have the following:

If I go to the folder path defined in OUTPUT_FILE logical schema, I can see the data file created there (they are all fake/random rows, not real people information):

I hope you liked this post. See you soon!