Hi guys, how are you?

Today we’ll continue the dimensions and cubes series (Part 1, Part 2 and Part 3 here) and see how to load data using surrogate keys.

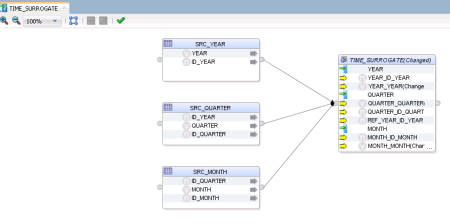

With all the settings done in the last post, the only thing left is to create the interfaces and map everything. For the surrogate key version, the interface and the mapping are exactly the same as for the no-surrogate version (as we saw in the previous posts) for both dimensions and facts, which is very nice.

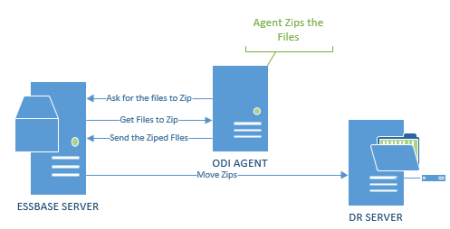

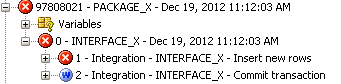

The interesting part is what ODI does behind the scenes. In the no-surrogate version, ODI created one mapping for each hierarchy and, at the end, merged everything together into a single table.

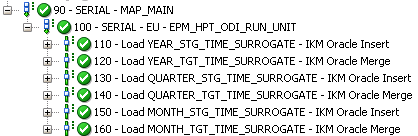

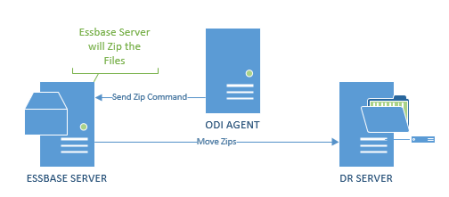

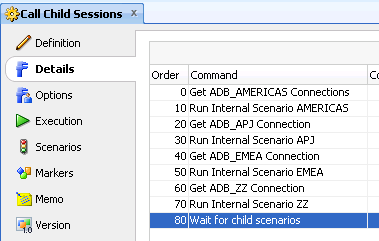

For the surrogate key version, ODI also generates one mapping for each hierarchy, but the main difference is that after each one it merges the result with the others. This happens because it needs to get the surrogate key for each level.

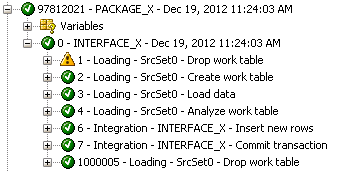

For each level, ODI automatically generates an insert into that level’s stage table, checking that the combination of column values does not already exist in the target table. (It does that to reduce the amount of data for the merge step, since the merge would otherwise insert or update everything and take more time than necessary.)
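To make the stage filter more concrete, here is a minimal sketch in Python with an in-memory SQLite database. It is not the code ODI generates: the table names `src_time` and `stg_year`, the simplified `s_time` layout and the sample rows are all assumptions made up for the illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical tables: a source, a stage table for the Year level,
    -- and a simplified version of the target dimension.
    CREATE TABLE src_time (id_year INTEGER, year_desc TEXT);
    CREATE TABLE stg_year (id_year INTEGER, year_desc TEXT);
    CREATE TABLE s_time   (year_sk INTEGER, id_year INTEGER, year_desc TEXT);
    INSERT INTO src_time VALUES (2014, 'FY14'), (2015, 'FY15'), (2016, 'FY16');
    INSERT INTO s_time   VALUES (1, 2014, 'FY14'), (2, 2015, 'Old FY15');
""")

# Stage only the rows that are new or changed: a source row is skipped when
# an identical (id_year, year_desc) pair already exists in the target.
conn.execute("""
    INSERT INTO stg_year (id_year, year_desc)
    SELECT DISTINCT s.id_year, s.year_desc
    FROM src_time s
    WHERE NOT EXISTS (
        SELECT 1 FROM s_time t
        WHERE t.id_year = s.id_year AND t.year_desc = s.year_desc
    )
""")

staged = conn.execute(
    "SELECT id_year, year_desc FROM stg_year ORDER BY id_year"
).fetchall()
print(staged)
```

In this sketch 2014 is left out of the stage table because the target already holds an identical row, while 2015 (changed description) and 2016 (new member) go through to the merge step.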

After the stage table is loaded, the next step is to merge it into the target table. For that, ODI just creates a “MERGE”: when a row matches, it updates the descriptions or attributes, and when it doesn’t match, it inserts the new row with a sequence value for the SK.
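The two branches of that merge can be sketched as follows, again in Python with SQLite. SQLite has no `MERGE` statement, so this swaps in an `UPDATE` followed by an `INSERT`, and an `AUTOINCREMENT` column stands in for the Oracle sequence. Table names and data are assumptions for the example only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE s_time (
        year_sk   INTEGER PRIMARY KEY AUTOINCREMENT,  -- stands in for the sequence
        id_year   INTEGER UNIQUE,
        year_desc TEXT
    );
    CREATE TABLE stg_year (id_year INTEGER, year_desc TEXT);  -- hypothetical stage table
    INSERT INTO s_time (id_year, year_desc) VALUES (2014, 'FY14'), (2015, 'Old FY15');
    INSERT INTO stg_year VALUES (2015, 'FY15'), (2016, 'FY16');
""")

# "WHEN MATCHED" branch: refresh the description of members that already exist.
conn.execute("""
    UPDATE s_time
    SET year_desc = (SELECT g.year_desc FROM stg_year g WHERE g.id_year = s_time.id_year)
    WHERE EXISTS (SELECT 1 FROM stg_year g WHERE g.id_year = s_time.id_year)
""")

# "WHEN NOT MATCHED" branch: insert new members; year_sk is generated automatically.
conn.execute("""
    INSERT INTO s_time (id_year, year_desc)
    SELECT g.id_year, g.year_desc
    FROM stg_year g
    WHERE NOT EXISTS (SELECT 1 FROM s_time t WHERE t.id_year = g.id_year)
""")

merged = conn.execute(
    "SELECT year_sk, id_year, year_desc FROM s_time ORDER BY year_sk"
).fetchall()
print(merged)
```

The existing member keeps its surrogate key and only gets the new description, while the new member receives the next key value.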

At the next level of the hierarchy ODI repeats the process, but joining the Year with the Quarter. ODI keeps doing this for each mapped level until the last one where, instead of a merge with both matched and not-matched branches, it just does a merge with the matched branch (since it knows everything will already be there).
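A rough sketch of the Quarter step, under stated assumptions: the dimension is reduced to a few hypothetical columns, the `src_time` table is invented, and the SK of a new quarter is simply copied from an auto-assigned primary key instead of coming from an Oracle sequence. The point is only to show how joining to the year-level rows brings the parent `year_sk` onto each quarter row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Simplified, hypothetical dimension: one row per member at every level.
    CREATE TABLE s_time (
        time_pk    INTEGER PRIMARY KEY,
        year_sk    INTEGER, id_year    INTEGER,
        quarter_sk INTEGER, id_quarter TEXT
    );
    -- Year-level rows are already loaded (quarter columns stay NULL).
    INSERT INTO s_time VALUES (1, 1, 2014, NULL, NULL), (2, 2, 2015, NULL, NULL);
    -- Hypothetical source holding each quarter and its parent year.
    CREATE TABLE src_time (id_year INTEGER, id_quarter TEXT);
    INSERT INTO src_time VALUES (2014, '2014-Q1'), (2014, '2014-Q2'), (2015, '2015-Q1');
""")

# Join the source to the year-level rows to pick up the parent YEAR_SK,
# and insert only the quarters that are not in the dimension yet.
conn.execute("""
    INSERT INTO s_time (year_sk, id_year, id_quarter)
    SELECT y.year_sk, s.id_year, s.id_quarter
    FROM src_time s
    JOIN s_time y
      ON y.id_year = s.id_year AND y.id_quarter IS NULL
    WHERE NOT EXISTS (SELECT 1 FROM s_time t WHERE t.id_quarter = s.id_quarter)
    ORDER BY s.id_quarter
""")

# Assumption for this sketch: the quarter SK equals the auto-assigned PK.
conn.execute("""
    UPDATE s_time SET quarter_sk = time_pk
    WHERE id_quarter IS NOT NULL AND quarter_sk IS NULL
""")

quarters = conn.execute("""
    SELECT time_pk, year_sk, quarter_sk, id_quarter
    FROM s_time WHERE id_quarter IS NOT NULL ORDER BY time_pk
""").fetchall()
print(quarters)
```

Each quarter row ends up carrying both its own key and the key of its year, which is what lets the next level repeat the same join one step further down.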

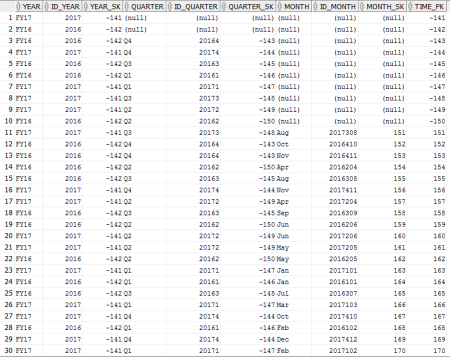

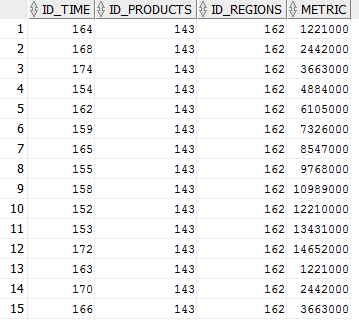

The result looks like this:

It’s nice that ODI already creates the dimension with an aggregated fact in mind: we can see that some rows hold just the year, others hold the year and the quarter, and the last ones hold all the information.

One thing to notice is that the PK is the same as the Month SK. This is because ODI is ready to create SCD type 2 (we’ll do another post to show how it works).

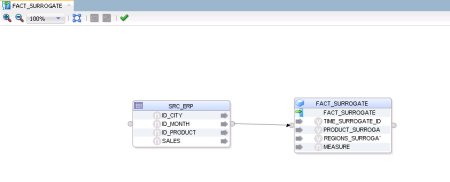

For the fact, the mapping is still the same as in the no-surrogate version, and again the difference is in the results.

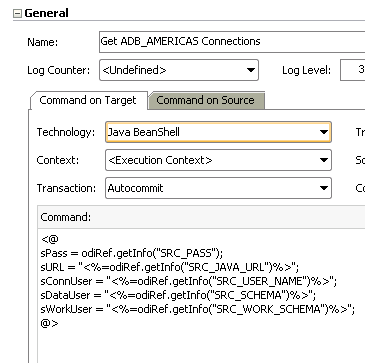

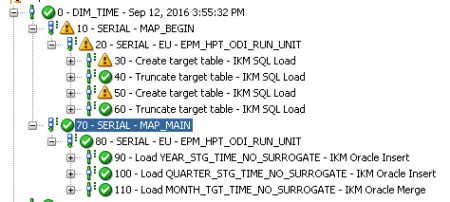

We can see in the Operator that ODI does something really neat this time.

```sql
MERGE INTO EPM_HPT_ODI_RUN.S_FACT FACT_SURROGATE1_FACT_SURROGATE USING
(SELECT TIME_SURROGATE_FACT_SURROGAT_1.MONTH_SK AS ID_TIME ,
    PRODUCT_SURROGATE_FACT_SURRO_1.PRODUCT_SK AS ID_PRODUCTS ,
    REGIONS_SURROGATE_FACT_SURRO_1.CITY_SK AS ID_REGIONS ,
    SRC_ERP.SALES AS METRIC
  FROM ((EPM_HPT_ODI_RUN.SRC_ERP SRC_ERP
  LEFT OUTER JOIN
    (SELECT TIME_SURROGATE_FACT_SURROGATE.ID_MONTH AS ID_MONTH ,
        TIME_SURROGATE_FACT_SURROGATE.MONTH_SK AS MONTH_SK ,
        TIME_SURROGATE_FACT_SURROGATE.TIME_PK AS TIME_PK
      FROM EPM_HPT_ODI_RUN.S_TIME TIME_SURROGATE_FACT_SURROGATE
      WHERE ((TIME_SURROGATE_FACT_SURROGATE.TIME_PK = TIME_SURROGATE_FACT_SURROGATE.MONTH_SK)
        AND (TIME_SURROGATE_FACT_SURROGATE.MONTH_SK IS NOT NULL) )
    ) TIME_SURROGATE_FACT_SURROGAT_1
    ON (SRC_ERP.ID_MONTH = TIME_SURROGATE_FACT_SURROGAT_1.ID_MONTH) )
  LEFT OUTER JOIN
    (SELECT PRODUCT_SURROGATE_FACT_SURROGA.ID_PRODUCT AS ID_PRODUCT ,
        PRODUCT_SURROGATE_FACT_SURROGA.PRODUCT_SK AS PRODUCT_SK ,
        PRODUCT_SURROGATE_FACT_SURROGA.PRODUCTS_PK AS PRODUCTS_PK
      FROM EPM_HPT_ODI_RUN.S_PRODUCTS PRODUCT_SURROGATE_FACT_SURROGA
      WHERE ((PRODUCT_SURROGATE_FACT_SURROGA.PRODUCTS_PK = PRODUCT_SURROGATE_FACT_SURROGA.PRODUCT_SK)
        AND (PRODUCT_SURROGATE_FACT_SURROGA.PRODUCT_SK IS NOT NULL) )
    ) PRODUCT_SURROGATE_FACT_SURRO_1
    ON (SRC_ERP.ID_PRODUCT = PRODUCT_SURROGATE_FACT_SURRO_1.ID_PRODUCT) )
  LEFT OUTER JOIN
    (SELECT REGIONS_SURROGATE_FACT_SURROGA.ID_CITY AS ID_CITY ,
        REGIONS_SURROGATE_FACT_SURROGA.CITY_SK AS CITY_SK ,
        REGIONS_SURROGATE_FACT_SURROGA.REGIONS_PK AS REGIONS_PK
      FROM EPM_HPT_ODI_RUN.S_REGIONS REGIONS_SURROGATE_FACT_SURROGA
      WHERE ((REGIONS_SURROGATE_FACT_SURROGA.REGIONS_PK = REGIONS_SURROGATE_FACT_SURROGA.CITY_SK)
        AND (REGIONS_SURROGATE_FACT_SURROGA.CITY_SK IS NOT NULL) )
    ) REGIONS_SURROGATE_FACT_SURRO_1
    ON (SRC_ERP.ID_CITY = REGIONS_SURROGATE_FACT_SURRO_1.ID_CITY)
) MERGE_SUBQUERY
ON ( FACT_SURROGATE1_FACT_SURROGATE.ID_TIME = MERGE_SUBQUERY.ID_TIME
  AND FACT_SURROGATE1_FACT_SURROGATE.ID_PRODUCTS = MERGE_SUBQUERY.ID_PRODUCTS
  AND FACT_SURROGATE1_FACT_SURROGATE.ID_REGIONS = MERGE_SUBQUERY.ID_REGIONS )
WHEN NOT MATCHED THEN
  INSERT
  (
    ID_TIME ,
    ID_PRODUCTS ,
    ID_REGIONS ,
    METRIC
  )
  VALUES
  (
    MERGE_SUBQUERY.ID_TIME ,
    MERGE_SUBQUERY.ID_PRODUCTS ,
    MERGE_SUBQUERY.ID_REGIONS ,
    MERGE_SUBQUERY.METRIC
  )
WHEN MATCHED THEN
  UPDATE SET METRIC = MERGE_SUBQUERY.METRIC
```

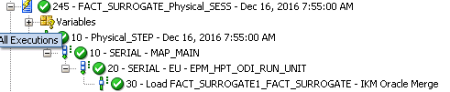

ODI automatically joins all our dimensions at level zero (the dimensions also hold rows at the higher levels for aggregated facts) to get the surrogate keys and use them in the fact table. This is very nice because large DWs have tons of dimensions, and mapping and joining everything by hand is very time consuming. The final result is this:

A perfect DW created using surrogate keys: in other words, instead of having the dimension PKs in the fact table, we have the SKs (which were generated by a sequence in the dimensions).

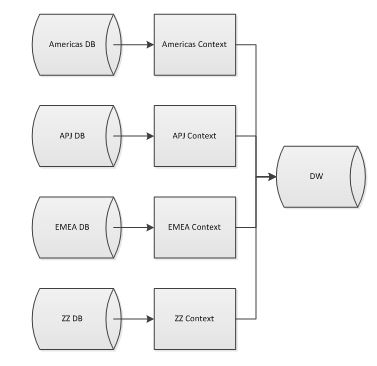
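The level-zero lookup used in the generated MERGE can be tried out on a small scale. The sketch below uses Python with SQLite and only the time dimension; the column names follow the generated SQL, but the reduced table layouts and the sample rows are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE s_time (time_pk INTEGER, year_sk INTEGER, month_sk INTEGER, id_month TEXT);
    INSERT INTO s_time VALUES
        (1, 1, NULL, NULL),       -- year-level row, used by aggregated facts
        (7, 1, 7, '2014-01'),     -- level-zero row: time_pk = month_sk
        (8, 1, 8, '2014-02');
    CREATE TABLE src_erp (id_month TEXT, sales REAL);
    INSERT INTO src_erp VALUES ('2014-01', 100.0), ('2014-02', 250.0), ('2014-03', 75.0);
""")

# Same filter as the generated subquery: keep only the rows where the PK
# equals the leaf SK, then look up the SK by the natural key of the source.
rows = conn.execute("""
    SELECT t.month_sk AS id_time, e.sales AS metric
    FROM src_erp e
    LEFT OUTER JOIN (
        SELECT id_month, month_sk
        FROM s_time
        WHERE time_pk = month_sk AND month_sk IS NOT NULL
    ) t ON e.id_month = t.id_month
    ORDER BY e.id_month
""").fetchall()
print(rows)
```

Note that, because the join is a left outer join, a source row whose month is missing from the dimension (here `2014-03`) still comes through, just with a NULL surrogate key.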

In summary, we think that if you are going to create simple dimensions and simple facts (without surrogate keys or SCD type 2), it’s still nice to use this new feature since it’s a good way to document and standardize your DW, but if we measure by development time it’s not worth it, since it’s very time consuming for a simple DW.

Now, if you want to create a DW using surrogate keys or SCD type 2, we found this new feature extremely useful, both for documentation and standardization and because it is a lot faster than doing it manually.

Thanks and see you soon.