Hi all, how are you doing? With some delay (same as last year, I was on vacation 🙂 ) we are very happy to announce that DEVEPM will once again be at Kscope! We are very honored to have been selected to present at the best EPM conference in the world! We got two presentations in, so here is what we are going to present at Kscope18:

Dynamic Metadata Integrations for Multiple ASO Applications

Metadata management is a challenge in complex environments, since metadata is always changing over time. If the metadata process is not built in a robust and dynamic way, we will end up with a lot of rework every time the business changes.

One of the best ways to maintain metadata on ASO cubes is using ODI, which gives us great flexibility to create really complex enterprise ETL processes. But if we use ODI in its standard way, we will end up with multiple similar ODI objects, since each ASO application/dimension is tied to a particular ODI data store. In other words, the more ASO applications we have, the more ODI objects we need, which increases the possible failure points and the code rework whenever something changes, costing us a lot of time, money and trust in those systems.

This case study describes how to implement a smart EPM environment that uses Oracle Data Integrator with Oracle Essbase and takes full advantage of its potential. This session will show how to create dynamic processes that change automatically for any number of Essbase applications, allowing metadata maintenance that meets the business needs with low development costs.

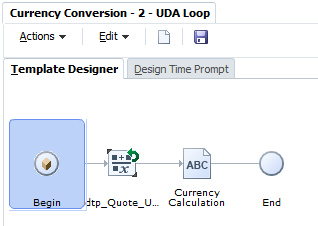

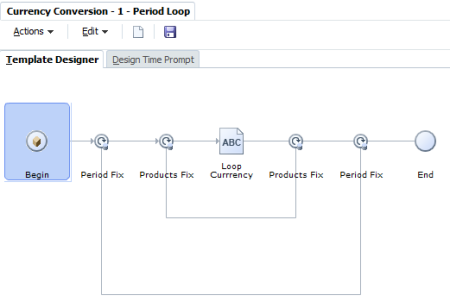

Incredible ODI tips to work with Hyperion tools that you ever wanted to know

ODI is an incredible and flexible development tool that goes beyond simple data integration. But most of its development power comes from outside-the-box ideas.

- Have you ever wanted to dynamically run any number of “OS” commands using a single ODI component?

- Have you ever wanted to have only one datastore and loop through different sources without the need for different ODI contexts?

- Have you ever wanted to have only one interface and loop through any number of ODI objects with a lot of control?

- Have you ever needed a “Third Command Tab” in your procedures or KMs to improve ODI's powers?

- Do you still use an old version of ODI and miss a way to know the values of the variables in a scenario execution?

- Did you know that ODI has 4 “Substitution Tags”? And do you know how useful they are?

- Do you use “Dynamic Variables” and know how powerful they can be?

- Do you know how to automatically control your ODI priority jobs? (Stop, Start and Restart scenarios)

If you want to know the answers to all these questions, please join us in this session to learn the special secrets of ODI that will take your development skills to the next level.

Kscope is the largest EPM conference in the world and it will be held in Orlando in June 2018. It will feature more than 300 technical sessions, five symposiums, deep-dive sessions, and hands-on labs over the course of five days.

Interested? If you register by March 29th you’ll take advantage of the Kscope early bird rates. Don’t waste any more time and come be part of the greatest EPM event in the world. If you are still unsure about it, read our post about how Kscope/ODTUG changed our lives! Kscope is indeed a life-changing event!