Today we will talk about something very important when we work with Hyperion Planning: its repository.

Today I can say that, if it was not for the Planning repository, 10% of the solutions/issues that I had in my clients should not have been implemented/fixed and 50% the solution/issues should take 10 times more to be implemented/fixed.

Well, as we are talking about integration in this post I will focus on this for now but I promises that I will talk about the repository itself in another time, focusing in Planning development, security and maintenance.

Let us start. First, what is the planning application repository? Basically it is used for manage all existing metadata in an planning application, which means, we can find all forms information, security, dimensions, settings, workflows and other stuffs of an application there and the best part is that we’ll find this in a relational table (normally oracle).

This allow us to easily get any information from any applications and, in an integration perspective, creating smarter integrations.

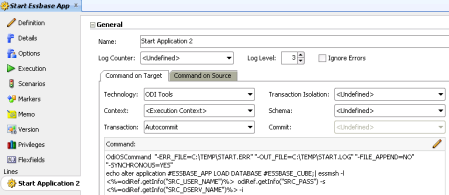

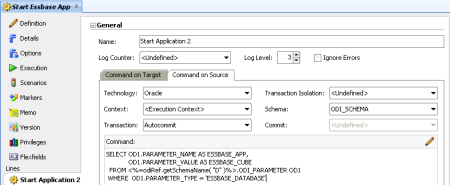

I said that because normally when we create an ODI interface to load metadata we basically use an ODI datastore for the mapping. as a source table to a planning dimension, and then load everything that we have in that table into planning.

However, is this a smart way to load metadata? I can say no. I have a good example in a big client that I’m currently working. They have an entity dimension with more than 23000 members, and in every maintenance cycle, only 1% of those entities changes or are added. That means that only 230 cost centers are new/changed every month. Well, in a normal ODI development, you will load all the 23000 members every month! And it is not only that: my client has seven applications, all of them with more or less the same size. Making the math, we would load more than 160000 members every month and only 1600 were needed to being loaded.

What can we do to solve that? Very simple solution. We only need to know what metadata already exists in the planning applications and compare it with the source metadata repository. The difference from this comparison is what we really need to load.

But how we can do that? With only two steps, one for extract data (that is the focus of this post) and other to compare the data (that we’ll talk in a later post of our ODI series).

Let’s start:

A Planning application repository has a central table where every object that you create in the application is stored. This table is the key source to extract metadata from the applications and its name is HSP_OBJECT. From this table we can get every information needed for the extract process and it makes easy to extract any necessary dimension information we need. In this table, we have six important columns:

- OBJECT_NAME: This column stores the object name;

- OBJECT_TYPE: This column stores the type of the member (Shared Member, Version, Entity, Time Period, Cube, Currency, Member, Planning Unit, Year, Attribute Dimension, Dimension, Alias, Folder, Form, Attribute Member, Group, User, Account, Calendar, Scenario, Currency, FX Table and User Defined Dimension Member);

- OBJECT_ID: This is the ID of the Object;

- PARENT_ID: This is the ID of the objects Parent;

- GENERATION: This informs from what generation the object belongs;

- HAS_CHILDREN: This informs if that object has a children.

We can notice that this table is based in a parent child relationship. This type of relationship is perfect in cases where we want to store a hierarchy inside of a table because in this way we do not need to know how many generations one hierarchy will have to create the table. We only need two columns with the parent and child IDs to rebuild that correlation between then. To achieve that Oracle database give us a very useful command: Connect by Prior … Start With.

The start with .. connect by clause can be used to select data that has a hierarchical relationship and Prior word is used to create recursive-condition. Long story short, everything we need to do is:

This command will retrieve all the Account Dimension hierarchy and if we need to rebuild the metadata from another dimension the only thing that we need to do is change the OBJECT_NAME to the desired dimension name, E.g. Entity. This query will be the core of our extract process. For now, we have the hierarchy built, but only this is not enough, as we need to have all information about the members in order to compare to our inbound table. We need to take the other metadata information from planning repository as well. We need to join all the following tables together to have everything that we need:

|

Repository Table Name

|

Extract Table

|

|

HSP_OBJECT

|

Member

|

|

Parent

|

|

Generation

|

|

Has_Children

|

|

Postion

|

|

HSP_OBJECT_TYPE

|

Dim_Type

|

|

HSP_MEMBER

|

Data Storage

|

|

Data Type

|

|

Two Pass Calculation

|

|

Aggregation (Plan1)

|

|

Aggregation (Plan2)

|

|

Aggregation (Plan3)

|

|

Aggregation (Wrkforce)

|

|

Aggregation (Capex)

|

|

HSP_ALIAS

|

Alias: Default

|

|

HSP_MEMBER_FORMULA

|

Formula

|

|

HSP_DIMENSION

|

Hier_Name

|

|

HSP_STRINGS

|

Description

|

|

HSP_ACCOUNT

|

Account Type

|

|

Time Balance

|

|

Skip Value

|

|

Exchange Rate Type

|

|

Variance Reporting

|

|

HSP_PLAN_TYPE

|

Plan Type (Plan1)

|

|

Plan Type (Plan2)

|

|

Plan Type (Plan3)

|

|

Plan Type (Wrkforce)

|

|

Plan Type (Capex)

|

|

Source Plan Type

|

|

HSP_ENTITY

|

Base Currency

|

|

HSP_ENUMERATION

|

Smart List

|

|

HSP_MEMBER_TO_ATTRIBUTE

|

Associated Attributes

|

|

HSP_UDA

|

UDA

|

This table contains the mapping of the tables and the information that you can find in the application repository. Two important things to say when you build the final extraction query from planning repository:

- Remember that the HSP_OBJECT table has all the metadata information regarding all the objects in planning. We need to join almost all the above tables with themselves again to get the name of that particular object. E.g., HSP_ALIAS only contains the IDs to the alias; the alias itself is stored in HSP_OBJECT.

- Remember to make LEFT JOINS to all those tables against HSP_OBJECT. Depending of the dimension type, we will not have anything stored in some tables. E.g., Account members does not have data stored in HSP_ENTITY table.

With this query ready, all we need to do is loop it passing the Dimension name to the core query mentioned above and it will extract all dimensions from planning.

See Part 2 of our ODI series.

This is it. We only need to compare the extracted planning data to our inbound data and we’ll see that in a future post.

See you soon.