Hey guys, how are you?

Today I have a quick tip for those of you who like (or need) to query the Planning repository.

The Planning repository stores both the Plan Type and the Consolidation in a very particular way. In fact, the same is true for a lot of other things, like security, form properties, etc., but I'll focus on these two because they are the most often used, and the solution is the same for all of them anyway.

If we take a look at the HSP_PLAN_TYPE table, we'll see something similar to this (depending on how many plan types you have in your app).

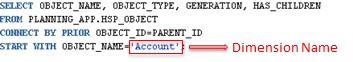

As we can see, Planning stores in this table all the plan types that were created when we set up the application. In my case I have 4 plan types, and we can have up to 5 BSO plan types in a Planning app. Now, if we join HSP_OBJECT and HSP_MEMBER, filtering on OBJECT_TYPE = 2, we can take a look at all the dimensions we set up in the repository.

The USED_IN column tells Planning in which plan types that member will exist. The interesting thing here is that you don't see the PLAN_TYPE ID you would expect, right? That is because a member can exist in more than one plan type, and if the PLAN_TYPE ID were used directly, we would need one row for each plan type.

Instead, we have just one row, but we can still tell Planning where that member should exist, and that is done by summing the PLAN_TYPE IDs together. This works because the IDs are powers of two (1, 2, 4, 8, 16), so every sum maps back to exactly one combination of plan types. In the example above, the Account dimension exists in all 4 plan types (1+2+4+8 = 15). The Products dimension exists in only one plan type (1), and from the number you can tell that it is the Pnl plan type.

As you can see, the idea here is to check whether a PLAN_TYPE ID is contained in the number stored in the USED_IN column. Another example is the Employee dimension, which has USED_IN set to 8. The only combination that fits is the 8 itself (1+2+4 = 7, 1+8 = 9…), meaning the plan type is WrkForce.
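To make the decomposition concrete, here is a small Python sketch, run outside the database just to check the arithmetic. It assumes the PLAN_TYPE IDs are 1, 2, 4, 8 and 16 and that USED_IN never exceeds 31; the name `plan_types_in` is made up for illustration.

```python
# Hypothetical helper: split a USED_IN value into the PLAN_TYPE IDs it contains.
# Assumes the IDs are the powers of two 1, 2, 4, 8, 16 and USED_IN <= 31.
PLAN_TYPE_IDS = [1, 2, 4, 8, 16]

def plan_types_in(used_in):
    """Return the PLAN_TYPE IDs whose sum makes up used_in."""
    found = []
    remaining = used_in
    # Walk from the largest ID down, subtracting every ID that still fits.
    for pt_id in sorted(PLAN_TYPE_IDS, reverse=True):
        if remaining >= pt_id:
            found.append(pt_id)
            remaining -= pt_id
    return sorted(found)

print(plan_types_in(15))  # Account  -> [1, 2, 4, 8]
print(plan_types_in(1))   # Products -> [1]
print(plan_types_in(8))   # Employee -> [8]
```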

I think the most common way to figure out whether a number is contained in another number is to use MOD.

- CASE WHEN MOD(USED_IN,2)>=1 THEN 1 ELSE 0 END PT_1

- CASE WHEN MOD(USED_IN,4)>=2 THEN 1 ELSE 0 END PT_2

- CASE WHEN MOD(USED_IN,8)>=4 THEN 1 ELSE 0 END PT_3

- CASE WHEN MOD(USED_IN,16)>=8 THEN 1 ELSE 0 END PT_4

- CASE WHEN MOD(USED_IN,32)>=16 THEN 1 ELSE 0 END PT_5
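If you want to check these five expressions without a database at hand, the same logic can be sketched in Python. This is only a mirror of the CASE expressions above, not something Planning provides; the function name `mod_flags` is hypothetical.

```python
def mod_flags(used_in):
    """Mirror of the CASE WHEN MOD(...) expressions: one 0/1 flag per plan type."""
    flags = {}
    for position, pt_id in enumerate([1, 2, 4, 8, 16], start=1):
        # MOD(USED_IN, 2 * id) >= id means the plan type is part of the sum.
        flags[f"PT_{position}"] = 1 if used_in % (2 * pt_id) >= pt_id else 0
    return flags

print(mod_flags(15))  # {'PT_1': 1, 'PT_2': 1, 'PT_3': 1, 'PT_4': 1, 'PT_5': 0}
print(mod_flags(8))   # {'PT_1': 0, 'PT_2': 0, 'PT_3': 0, 'PT_4': 1, 'PT_5': 0}
```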

The Oracle MOD(N,M) is used to return the remainder of a dividend divided by a divisor where:

| Argument | Meaning |
|---|---|
| N | Dividend |
| M | Divisor |

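So in our case, we need to test whether the USED_IN number contains the PLAN_TYPE ID, and for that we MOD it by the rolling sum of the plan type IDs up to the one we are testing, plus 1 (which is the same as twice the ID: 2, 4, 8, 16, 32). If the remainder is greater than or equal to the ID, the plan type is there. To make it easier, I'll put that in numbers: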

- N = USED_IN = 31 (max number possible)

- M = PLAN_TYPE ID = 1 (Pnl, the one I want to test) + 1 = 2

- MOD(31, 2) = 1

- 31/2 = 15, remainder = 1

- MOD = 1

What that tells us is that if the MOD is 1, plan type 1 is contained in that number. I ran a simulation just to show when plan type 1 does not exist in USED_IN:

As we can see, plan type 1 only exists in the odd results (as expected), which means it does not exist in any combination made only of the other 4 plan types (2, 4, 2+4=6, 8, 8+2=10, 8+4=12, 8+2+4=14, 16, 16+2=18, 16+4=20, 16+2+4=22, 16+8=24, 16+8+2=26, 16+8+4=28, 16+8+2+4=30).
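The same simulation is easy to reproduce outside the database. Here is a minimal Python sketch, assuming five plan types with IDs 1, 2, 4, 8 and 16, so that USED_IN ranges from 1 to 31; the variable names are just for illustration.

```python
# Every non-empty USED_IN value possible with five plan types (IDs 1, 2, 4, 8, 16).
all_values = range(1, 32)

# MOD(USED_IN, 2) = 1 means plan type 1 is part of the sum.
with_pt1 = [v for v in all_values if v % 2 == 1]
# MOD(USED_IN, 2) = 0 means only the other four plan types are involved.
without_pt1 = [v for v in all_values if v % 2 == 0]

print(with_pt1)     # the 16 odd values, 1 to 31
print(without_pt1)  # the 15 even values, 2 to 30
```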

The same is true for the other plan types; you can try them out using MOD. Now, this works well, but there's a much easier and cleaner way to do exactly the same thing: the BITAND function.

The BITAND function treats its inputs and its output as vectors of bits; the output is the bitwise AND of the inputs. Basically, it performs the steps below:

- Converts the inputs into binary.

- Performs a standard bitwise AND operation on these two strings.

- Converts the binary result back into decimal and returns the value.
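The three steps can be followed by hand with a small Python sketch. It spells out the conversion on purpose (Python's own `&` operator does the same thing in one go) and assumes both inputs fit in 5 bits, which is enough for USED_IN values up to 31; `bitand_steps` is a made-up name.

```python
def bitand_steps(a, b, width=5):
    """Show each BITAND step; assumes a and b both fit in `width` bits."""
    # Step 1: convert the inputs into binary strings of equal length.
    bits_a = format(a, f"0{width}b")
    bits_b = format(b, f"0{width}b")
    # Step 2: AND the two strings position by position.
    bits_result = "".join(
        "1" if x == "1" and y == "1" else "0" for x, y in zip(bits_a, bits_b)
    )
    # Step 3: convert the binary result back into decimal.
    return bits_a, bits_b, bits_result, int(bits_result, 2)

print(bitand_steps(15, 8))  # ('01111', '01000', '01000', 8)
print(bitand_steps(1, 8))   # ('00001', '01000', '00000', 0)
```

With USED_IN = 15 the plan type 8 comes back as 8, and with USED_IN = 1 it comes back as 0.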

OK, it looks more complicated now, but the good news is that using it is simpler than it sounds. The main difference between the two approaches is that the MOD test gives us a 0/1 flag, while BITAND returns the value you asked about when it is present (and 0 when it is not). Expanding my previous test using BITAND:

As you can see, because BITAND returns the number you asked for instead of 0 or 1, we can join HSP_PLAN_TYPE with HSP_MEMBER by using USED_IN and PLAN_TYPE inside BITAND as the join condition:

As you can see, this is a far better way to split the members by plan type. Now we can see that the Products dimension only exists in the Pnl plan type and that Entity exists in 4 different plan types. We don't need to worry about any mathematical formula to build all our MODs; we just need to join our plan type table on the BITAND of USED_IN and PLAN_TYPE.
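To see why the join condition works, here is a Python sketch that imitates it with in-memory lists instead of the real tables. The USED_IN values for Account, Products and Employee are the ones mentioned earlier in this post; the plan type names for IDs 2 and 4 are placeholders I made up, and `join_by_bitand` is a hypothetical helper.

```python
# Stand-in for HSP_PLAN_TYPE: (PLAN_TYPE, name). Names for 2 and 4 are placeholders.
plan_types = [(1, "Pnl"), (2, "PlanType2"), (4, "PlanType4"), (8, "WrkForce")]
# Stand-in for the dimension rows: (name, USED_IN).
dimensions = [("Account", 15), ("Products", 1), ("Employee", 8)]

def join_by_bitand(dimensions, plan_types):
    """Keep every (dimension, plan type) pair where BITAND(USED_IN, PLAN_TYPE) = PLAN_TYPE."""
    rows = []
    for dim_name, used_in in dimensions:
        for pt_id, pt_name in plan_types:
            if (used_in & pt_id) == pt_id:
                rows.append((dim_name, pt_name))
    return rows

for row in join_by_bitand(dimensions, plan_types):
    print(row)
```

Account comes back once per plan type, while Products and Employee come back with a single row each (Pnl and WrkForce).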

The Consolidation is another place where you can use exactly the same thing. Instead of using something like this:

- DECODE(MOD(CONSOL_OP,8),'0','+','1','-','2','*','3','/','4','%','5','~','6','Never',CONSOL_OP) CON1

- DECODE(MOD(TRUNC(CONSOL_OP/8),8),'0','+','1','-','2','*','3','/','4','%','5','~','6','Never',CONSOL_OP) CON2

- DECODE(MOD(TRUNC(CONSOL_OP/64),8),'0','+','1','-','2','*','3','/','4','%','5','~','6','Never',CONSOL_OP) CON3

- DECODE(MOD(TRUNC(CONSOL_OP/512),8),'0','+','1','-','2','*','3','/','4','%','5','~','6','Never',CONSOL_OP) CON4

- DECODE(MOD(TRUNC(CONSOL_OP/4096),8),'0','+','1','-','2','*','3','/','4','%','5','~','6','Never',CONSOL_OP) CON5

You can create a parameter table with the values for each kind of consolidation and use BITAND with that info:
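As a rough sketch of the idea in Python: the DECODE expressions above read CONSOL_OP as one base-8 digit (3 bits) per plan type, so each plan type can be isolated with a mask of 7 shifted into its slot. The masks (7, 56, 448, 3584, 28672) and the function name `consol_ops` are my own assumptions for illustration and are not taken from the repository.

```python
# Operator codes as listed in the DECODE expressions above.
CONSOL_SYMBOLS = {0: "+", 1: "-", 2: "*", 3: "/", 4: "%", 5: "~", 6: "Never"}

def consol_ops(consol_op, plan_type_count=5):
    """Decode one consolidation operator per plan type from CONSOL_OP."""
    ops = []
    for slot in range(plan_type_count):
        mask = 7 << (3 * slot)                   # 7, 56, 448, 3584, 28672
        code = (consol_op & mask) >> (3 * slot)  # same as MOD(TRUNC(CONSOL_OP / 8**slot), 8)
        # Unknown codes fall back to the raw CONSOL_OP, like the DECODE default.
        ops.append(CONSOL_SYMBOLS.get(code, consol_op))
    return ops

print(consol_ops(0))   # ['+', '+', '+', '+', '+']
print(consol_ops(41))  # 41 = 1 + 5*8 -> ['-', '~', '+', '+', '+']
```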

I hope this will be useful for you guys. See you soon.